A Mental Model for Google’s Titans: Memory as Weights vs. Memory as Buffer

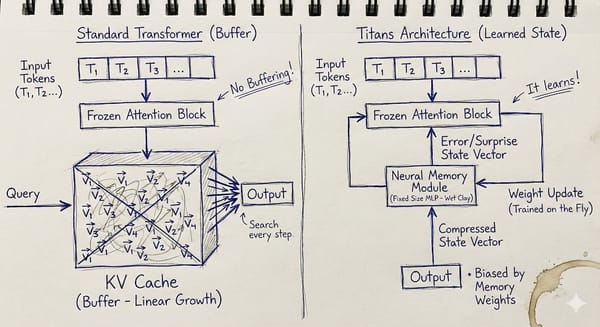

Most current Large Language Models (LLMs) handle long documents by enlarging their "context window." This works, but it scales poorly: in a standard Transformer, self-attention compares every token with every other token, so compute grows quadratically with the length of the window. Google’s new Titans architecture (built on the MIRAS framework) takes a fundamentally different approach to memory. To understand how it works,